Overview of the Multivariate Embedding Platform

Overview of the Multivariate Embedding Platform

The Multivariate Embedding platform performs dimension reduction, which maps points from a high-dimensional space, {x1, x2,..., xn}, to points in a low-dimensional space, {y1, y2,..., yn}. The goal of dimension reduction is to map points to the lower dimension while still retaining the important information present in the high-dimensional data. The specific techniques used in the Multivariate Embedding platform are the Uniform Manifold Approximation and Projection (UMAP) method and the t-Distributed Stochastic Neighbor Embedding (t-SNE) method. The UMAP method is a manifold learning technique, which is also known as nonlinear dimension reduction. This technique is based in Riemannian geometry and algebraic topology (May, 1992). The t-SNE method is a variation of Stochastic Neighbor Embedding (Hinton and Roweis, 2002).

Both dimension reduction methods that are available in the Multivariate Embedding platform are k neighbor-based learning algorithms. These types of algorithms first find near neighbors for each point to create a k neighbor graph in the high-dimensional space. Then, a low-dimensional mapping is created to map points from the high-dimensional space to the low-dimensional space while preserving the structure of the graph.

Overview of the UMAP Method

The UMAP method first finds near neighbors for each point and then a k neighbor graph is created to form a topological structure. Using the default settings, each point is connected to at least one other point, the nearest neighbor, and it is not connected to any neighbors after the 15th neighbor. The neighbors in between form a fuzzy area. The topological representation of the high-dimensional data is then created by merging the edges of the fuzzy areas together. For more information about how the edges are merged, see McInnes et al. (2018).

To create a low-dimensional mapping, UMAP minimizes the cross-entropy between the high-dimensional topological representation and the low-dimensional topological representation using gradient descent (McInnes et al., 2018). The UMAP method preserves the global structure of the data while minimizing computation time and is able to handle extremely large data sets.

Overview of the t-SNE Method

The t-SNE method is based on the pairwise similarities between points. Each pairwise similarity is represented by the conditional probability that two points are neighbors. In the high-dimensional space, the distances are converted into conditional probabilities using the Gaussian distribution. In the low-dimensional map, the distances are converted into probabilities using the Student’s t distribution with one degree of freedom. This is where the t-SNE method gets its name (van der Maaten and Hinton, 2008).

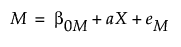

For a good low-dimensional mapping, the pairwise similarity between {xi, xj} in the high-dimensional space is the same as the pairwise similarity between {yi, yj} in the low-dimensional space. Under this assumption, the t-SNE method finds a low-dimensional mapping that minimizes the difference between the high-dimensional similarity and the low-dimensional similarity. The difference is measured using a version of the Kullback-Leibler divergence, which is then minimized using gradient descent. For more information about the t-SNE method, see Statistical Details for the Multivariate Embedding Platform.