Example of Support Vector Machines

In this example, you use the Tuning Design option to fit several Support Vector Machine models with different kernel functions and parameter values. Your goal is to find the best classification model for predicting disease progression in patients who have diabetes. You have baseline medical data and a binary measure of disease progression obtained one year after each patient’s initial visit. This measure classifies disease progression as either Low or High.

1. Select Help > Sample Data Folder and open Diabetes.jmp.

2. Select Analyze > Predictive Modeling > Support Vector Machines.

3. Select Y Binary and click Y, Response.

4. Select Age through Glucose and click X, Factor.

5. Select Validation and click Validation.

6. Click OK.

7. In the Model Launch control panel, confirm that the kernel function is set to Radial Basis Function and select Tuning Design.

8. Enter 10 next to Number of Runs.

9. Click Go.

10. Click the gray triangle next to Model Launch to open the Model Launch control panel.

11. Change the kernel function to Linear and select Tuning Design.

12. Enter 10 next to Number of Runs.

13. Click Go.
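The steps above ask JMP to generate 10 parameter settings for each kernel. As a rough illustration only, the following minimal Python sketch shows the standard linear and radial basis function (RBF) kernel formulas and a hypothetical stand-in for a tuning design. It is not JMP's implementation: JMP constructs its own design points, whereas this sketch assumes log-uniform random sampling of the cost and gamma parameters. The function names, parameter ranges, and seeds are all assumptions made for illustration, and the sketch only generates candidate settings; it does not fit any models.

```python
import math
import random


def linear_kernel(x, z):
    """Linear kernel: the dot product of x and z."""
    return sum(a * b for a, b in zip(x, z))


def rbf_kernel(x, z, gamma):
    """Radial basis function kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def log_uniform(rng, low, high):
    """Draw one value uniformly on a log10 scale between low and high."""
    return 10 ** rng.uniform(math.log10(low), math.log10(high))


def tuning_design(kernel, n_runs, seed=None,
                  cost_range=(0.01, 100.0), gamma_range=(0.001, 10.0)):
    """Return n_runs hypothetical parameter settings for one kernel.

    Assumption: random log-uniform sampling stands in for JMP's design.
    Linear runs get only a cost value; RBF runs also get a gamma value.
    """
    rng = random.Random(seed)
    runs = []
    for _ in range(n_runs):
        run = {"kernel": kernel, "cost": log_uniform(rng, *cost_range)}
        if kernel == "rbf":
            run["gamma"] = log_uniform(rng, *gamma_range)
        runs.append(run)
    return runs


# 10 RBF runs followed by 10 linear runs, mirroring the two Go clicks.
design = tuning_design("rbf", 10, seed=1) + tuning_design("linear", 10, seed=2)
for i, run in enumerate(design, start=1):
    print(i, run)
```

Fixing the seed makes the hypothetical design repeatable; without a seed, each call produces different points, which is one reason tuning results can vary from run to run.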

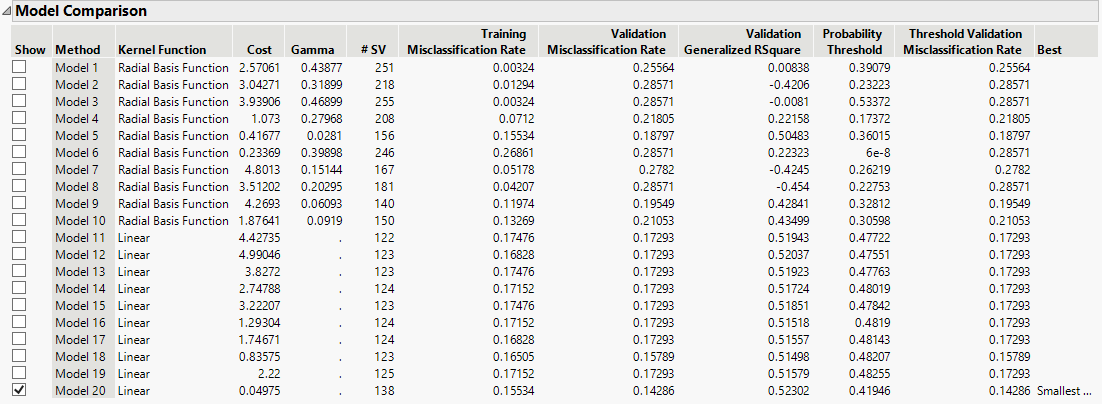

Figure 9.2 Model Comparison Report

The Model Comparison report shows that the best model in terms of misclassification rate and RSquare is Model 20. This model has a linear kernel function with a cost parameter of 0.04975. This is the model to analyze further.
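The misclassification rate used to pick the best model is the fraction of observations whose predicted class differs from the actual class. The following minimal Python sketch computes that rate and selects the model with the lowest value. The model names, responses, and predictions are hypothetical toy data, not results from Diabetes.jmp, and the sketch does not compute RSquare, which the JMP report also shows.

```python
def misclassification_rate(actual, predicted):
    """Fraction of observations whose predicted class differs from the actual class."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("actual and predicted must be non-empty and of equal length")
    errors = sum(1 for a, p in zip(actual, predicted) if a != p)
    return errors / len(actual)


def best_model(predictions, actual):
    """Return (name, rate) for the model with the lowest misclassification rate."""
    rates = {name: misclassification_rate(actual, pred)
             for name, pred in predictions.items()}
    name = min(rates, key=rates.get)
    return name, rates[name]


# Hypothetical validation responses and predictions from three hypothetical models.
actual = ["Low", "Low", "High", "High", "Low"]
predictions = {
    "Model A": ["Low", "Low", "Low", "High", "Low"],     # 1 error of 5
    "Model B": ["Low", "High", "Low", "Low", "Low"],     # 3 errors of 5
    "Model C": ["High", "Low", "High", "High", "High"],  # 2 errors of 5
}
print(best_model(predictions, actual))  # ('Model A', 0.2)
```

In practice, the comparison is made on the validation set, because a rate computed on the training data alone tends to favor models that overfit.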

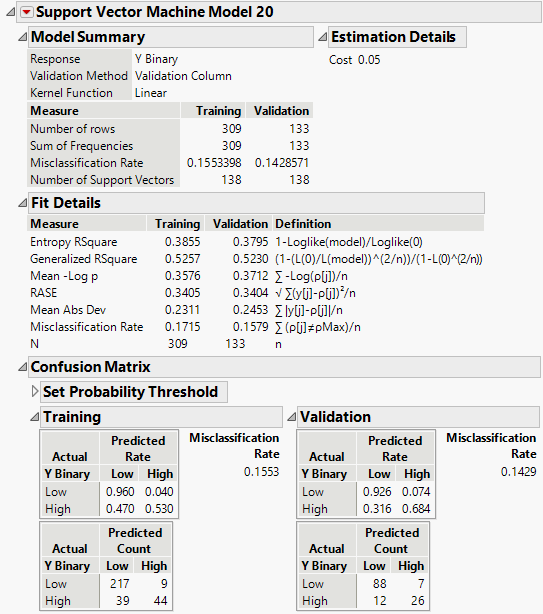

Figure 9.3 Model Report for Best Fitting Model

The Model Summary report shows that the misclassification rates for the training and validation sets are very similar, which is a good indication that the model did not overfit the data. The confusion matrices show which types of observations the model misclassifies. In the confusion matrices, the upper left cell shows that the model correctly classifies the Low responses most of the time (96% in training and 92.6% in validation). However, the model correctly classifies a smaller share of the High responses (53% in training and 68.4% in validation). Therefore, most of the misclassifications are High responses that the model classifies as Low.
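The percentages in the confusion matrices are per-class rates: each count is divided by the total for its actual class. The following minimal Python sketch shows that calculation, assuming rows hold the actual classes and columns hold the predicted classes. The counts are hypothetical and are not taken from the Diabetes.jmp results; the helper names are also illustrative.

```python
def class_rates(confusion):
    """Divide each row of a count confusion matrix by its row total.

    confusion[actual][predicted] holds counts, so each diagonal rate is the
    share of that actual class that the model classified correctly.
    """
    rates = {}
    for actual, row in confusion.items():
        total = sum(row.values())
        rates[actual] = {pred: count / total for pred, count in row.items()}
    return rates


def most_common_error(confusion):
    """Return the (actual, predicted) pair with the largest off-diagonal count."""
    errors = {
        (actual, pred): count
        for actual, row in confusion.items()
        for pred, count in row.items()
        if actual != pred
    }
    return max(errors, key=errors.get)


# Hypothetical counts for illustration only; not the Diabetes.jmp results.
confusion = {
    "Low": {"Low": 45, "High": 5},
    "High": {"Low": 10, "High": 10},
}
rates = class_rates(confusion)
for actual in confusion:
    print(actual, "correctly classified:", rates[actual][actual])
worst_error = most_common_error(confusion)
print("Most common error (actual, predicted):", worst_error)
```

Comparing the off-diagonal counts, rather than the rates, identifies the most frequent type of error, because the Low and High classes can contain different numbers of patients.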

Note: Results vary due to the random nature of the design points in the tuning design.