Example of the Assess Measurement Model Report

A confirmatory factor analysis (CFA) model enables you to test alternative measurement models. The Assess Measurement Model report provides tools for quantifying the reliability and validity of tests and measures. The results include indicator reliability, coefficients Omega and H, and a construct validity matrix.

In this example, you assess the validity and reliability of a consumer data survey. You fit a confirmatory factor analysis model with five latent variables: Privacy, Security, Reputation, Trust, and Purchase Intent. You then use the Assess Measurement Model option to evaluate the reliability and validity of the survey.

1. Select Help > Sample Data Folder and open Online Consumer Data.jmp.

2. Click the green triangle next to the SEM: CFA script.

The script runs a confirmatory factor analysis model for the survey data.

3. Click the gray disclosure icons next to Model Specification and Model Comparison to hide those parts of the report.

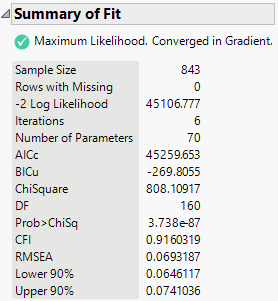

Figure 8.13 Summary of Fit for CFA Model

The chi-square statistic for this model, listed in the Summary of Fit report, is 808.11 with 160 degrees of freedom. The corresponding p-value is significant, which is evidence against the null hypothesis that the model fits well. However, the chi-square statistic is strongly influenced by sample size and is often significant in samples larger than about 200 to 300 observations, even when the model provides good fit to the data. In this example, the sample size is 843, so the fit of the model should also be assessed with the CFI and RMSEA fit indices. Both indicate good fit: CFI is greater than 0.9 and RMSEA is less than 0.1.
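
As a rough cross-check of the RMSEA claim, the following minimal sketch computes an RMSEA point estimate from the chi-square statistic, degrees of freedom, and sample size quoted above. It assumes the common formula with N - 1 in the denominator; the function name `rmsea` is hypothetical, and the value that JMP reports might differ slightly if a different variant of the formula is used.

```python
import math


def rmsea(chi_square: float, df: int, n_obs: int) -> float:
    """RMSEA point estimate, assuming the common (N - 1) denominator."""
    # Excess chi-square over its expectation (df), floored at zero.
    excess = max(chi_square - df, 0.0)
    return math.sqrt(excess / (df * (n_obs - 1)))


# Values quoted in the Summary of Fit discussion above.
value = rmsea(chi_square=808.11, df=160, n_obs=843)
print(f"RMSEA = {value:.3f}")
print("Below the 0.1 cutoff:", value < 0.1)
```

Under this assumption the estimate is roughly 0.07, which is consistent with the conclusion that RMSEA is below 0.1.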

4. Click the red triangle next to Structural Equation Model: CFA and select Assess Measurement Model.

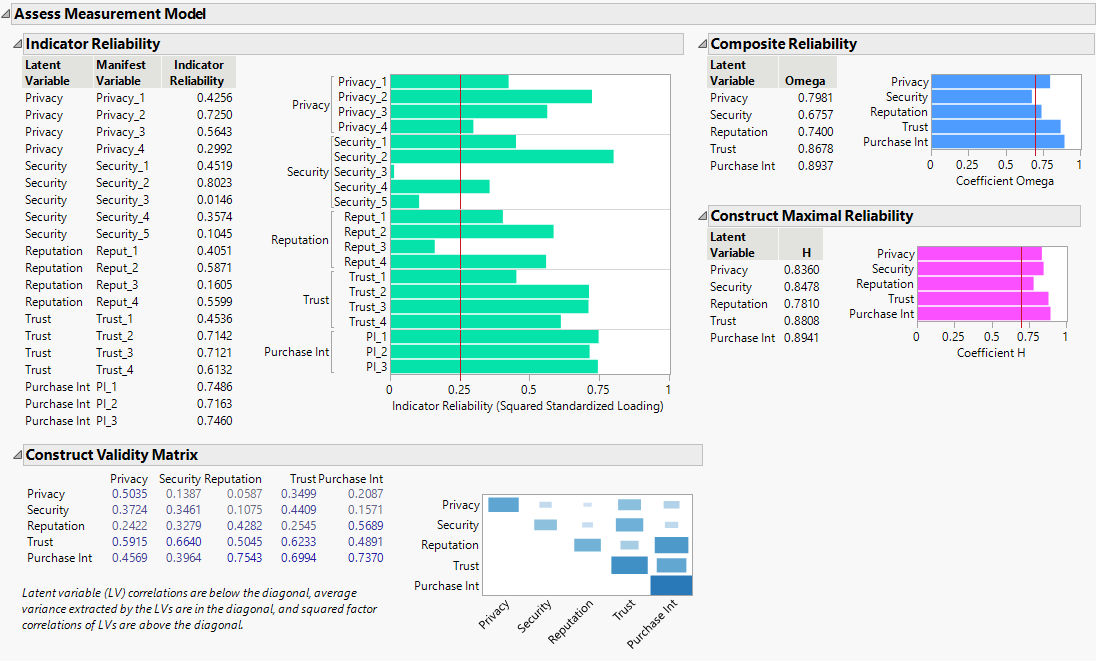

Figure 8.14 Assess Measurement Model Report for CFA Model

The Indicator Reliability plot shows the squared standardized loading of each indicator on its latent variable, along with a suggested minimum threshold for acceptable reliability (0.25). You can see that two of the Security-related questions and one of the Reputation-related questions do not capture much of the variability in the corresponding latent variable. The Security_3 question has such a low value that it could be removed with almost no loss to the reliability of the construct, whereas the Security_5 and Reput_4 questions could be revised in an attempt to improve their reliability.
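
The indicator reliability calculation can be sketched as follows. This is an illustration only: the item names and loadings are hypothetical and are not the values from the JMP report. The only quantity taken from the text is the 0.25 threshold.

```python
THRESHOLD = 0.25  # suggested minimum reliability from the report

# Hypothetical standardized loadings, NOT values from the JMP report.
loadings = {"Item_A": 0.82, "Item_B": 0.45, "Item_C": 0.30}


def indicator_reliability(standardized_loading: float) -> float:
    """Indicator reliability is the squared standardized loading."""
    return standardized_loading ** 2


reliability = {name: indicator_reliability(l) for name, l in loadings.items()}

# Indicators that fall below the suggested threshold.
flagged = [name for name, r in reliability.items() if r < THRESHOLD]

for name, r in reliability.items():
    status = "below threshold" if name in flagged else "ok"
    print(f"{name}: {r:.3f} ({status})")
```

A standardized loading must be at least 0.5 for its square to reach 0.25, so the threshold in the plot corresponds to indicators that share at least a quarter of their variance with the latent variable.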

The Composite Reliability and Construct Maximal Reliability reports show the coefficients Omega and H, respectively, for each latent variable. These values range from 0 to 1, and it is recommended that they be about 0.70 or greater. Omega represents the proportion of variance in the observed composite score that is attributable to the latent variable. H represents the proportion of latent variable variance that is captured by the indicators. The Omega coefficient for Security is just below the suggested threshold of 0.70, but it is close enough to deem the composite reliable for research purposes. The suggested thresholds should be interpreted in the context of the goals for the survey. If you plan to use composite scores to make decisions about individuals, then reliability should be higher than the suggested threshold (around 0.90 or greater). If you plan to use composite scores for research purposes, then the lower end of the threshold is acceptable (Nunnally 1978). Composite reliability for Security and Reputation could be improved by improving the indicator reliability of the Security_3, Security_5, and Reput_4 questions.
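
To make the two coefficients concrete, here is a minimal sketch using their commonly cited formulas for a single latent variable. It assumes standardized loadings, a latent variance of 1, and uncorrelated errors, so each error variance is 1 minus the squared loading. The loadings and function names are hypothetical, and the exact computation in JMP might differ.

```python
def coefficient_omega(loadings: list[float]) -> float:
    """Composite reliability: (sum of loadings)^2 over total composite variance."""
    total = sum(loadings)
    error_variance = sum(1 - l ** 2 for l in loadings)  # assumes standardized items
    return total ** 2 / (total ** 2 + error_variance)


def coefficient_h(loadings: list[float]) -> float:
    """Construct maximal reliability (reliability of the optimally weighted composite)."""
    ratio = sum(l ** 2 / (1 - l ** 2) for l in loadings)
    return ratio / (1 + ratio)


# Hypothetical standardized loadings, NOT values from the JMP report.
example = [0.8, 0.7, 0.6]
omega = coefficient_omega(example)
h = coefficient_h(example)

print(f"Omega = {omega:.3f}, meets 0.70: {omega >= 0.70}")
print(f"H     = {h:.3f}, meets 0.70: {h >= 0.70}")
```

Because H weights each indicator optimally, it is never smaller than Omega under these assumptions, which is why a weak indicator tends to pull Omega down more than H.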

The Construct Validity Matrix report provides a way to determine whether the latent variables are measuring what you think they are measuring. Notice in the matrix visualization that the diagonal values for Privacy, Trust, and Purchase Intent are larger than the values above and to the right of them. However, this is not the case for Security and Reputation. This is further evidence that the Security-related and Reputation-related questions could be improved to better measure the Security and Reputation latent variables.
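
The diagonal-versus-off-diagonal comparison can be sketched as follows. The sketch assumes that each diagonal value is an average variance extracted (AVE) and that the values it is compared against are squared correlations between latent variables, as in the Fornell-Larcker criterion. The construct names and numbers are hypothetical and are not taken from the JMP report.

```python
# Hypothetical AVE values (diagonal of the matrix), NOT from the JMP report.
ave = {"A": 0.60, "B": 0.35, "C": 0.55}

# Hypothetical squared correlations between constructs (off-diagonal values).
squared_corr = {("A", "B"): 0.40, ("A", "C"): 0.20, ("B", "C"): 0.30}


def constructs_failing_check(ave: dict, squared_corr: dict) -> list[str]:
    """Return constructs whose AVE does not exceed every squared
    correlation that involves them."""
    failing = []
    for name, value in ave.items():
        related = [r2 for pair, r2 in squared_corr.items() if name in pair]
        if any(value <= r2 for r2 in related):
            failing.append(name)
    return failing


failing = constructs_failing_check(ave, squared_corr)
print("Constructs needing attention:", failing)
```

In this hypothetical data, construct B shares more variance with construct A than it extracts from its own indicators, which is the pattern the text describes for Security and Reputation.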

You conclude that the survey could be improved by removing or revising some of the questions related to Security and Reputation.

For more information about the components of the report, see Assess Measurement Model.