Lack of Fit

In the Fit Least Squares report, the Lack of Fit option gives details for a test that assesses whether the model fits the data well. The Lack of Fit report appears only when it is possible to conduct this test. The test relies on the ability to estimate the variance of the response using an estimate that is independent of the model. Constructing this estimate requires that response values are available at replicated values of the model effects. The test involves computing an estimate of pure error, based on a sum of squares, using these replicated observations.

In the following situations, the Lack of Fit report does not appear because the test statistic cannot be computed:

• There are no replicated points with respect to the X variables, so it is impossible to calculate a pure error sum of squares.

• The model is saturated, meaning that there are as many estimated parameters as there are observations. Such a model fits perfectly, so it is impossible to assess lack of fit.
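The two situations above can be checked mechanically. The following is a minimal sketch, not the software's own logic: the function name `lack_of_fit_available`, its arguments, and the returned messages are hypothetical, and `n_params` is assumed to count every estimated parameter, including the intercept.

```python
from collections import Counter

# Hypothetical helper (not part of any statistics package): reports whether
# the two conditions described above allow a lack of fit test.
def lack_of_fit_available(x_rows, n_params):
    """x_rows: one tuple of X settings per observation.
    n_params: number of estimated parameters (assumed to include the intercept)."""
    n_obs = len(x_rows)
    # Saturated model: as many estimated parameters as observations.
    if n_params >= n_obs:
        return False, "model is saturated"
    # Replication: at least one combination of X settings must occur twice.
    counts = Counter(tuple(row) for row in x_rows)
    if all(count == 1 for count in counts.values()):
        return False, "no replicated points"
    return True, "pure error can be estimated"

print(lack_of_fit_available([(1,), (1,), (2,), (3,)], n_params=2))
# (True, 'pure error can be estimated')
print(lack_of_fit_available([(1,), (2,), (3,)], n_params=2))
# (False, 'no replicated points')
```

Real software might apply further checks; this sketch covers only the two cases listed above.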

The difference between the error sum of squares from the model and the pure error sum of squares is called the lack of fit sum of squares. If the model is not adequate, the lack of fit variation can be significantly greater than the pure error variation. For example, a predictor might have the wrong functional form, or the model might omit needed interaction effects or include the wrong ones.

The Lack of Fit report contains the following columns:

Source

The three sources of variation: Lack of Fit, Pure Error, and Total Error.

DF

The degrees of freedom (DF) for each source of error:

– The DF for Total Error is the same as the DF value found on the Error line of the Analysis of Variance table. Based on the sum of squares decomposition, the Total Error DF is partitioned into degrees of freedom for Lack of Fit and for Pure Error.

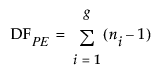

– The Pure Error DF is pooled from each replicated group of observations. In general, if there are $g$ groups and if each group has identical settings for each effect, the pure error DF, denoted $\mathrm{DF}_{PE}$, is defined as follows:

$$\mathrm{DF}_{PE} = \sum_{i=1}^{g} (n_i - 1)$$

where $n_i$ is the number of replicates in the $i$th group.

– The Lack of Fit DF is the difference between the Total Error and Pure Error DFs.
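The degrees-of-freedom partition described above can be sketched in a few lines. This is an illustration under stated assumptions: the function name `lack_of_fit_df` and the example data are hypothetical, and the Total Error DF is taken to be the number of observations minus the number of estimated parameters (intercept included), which holds for a full-rank least squares fit.

```python
from collections import Counter

# Hypothetical helper: partitions the Total Error DF into Lack of Fit and
# Pure Error DF. Assumes a full-rank model with n_params estimated parameters.
def lack_of_fit_df(x_rows, n_params):
    n_obs = len(x_rows)
    # Group observations that share identical settings for every effect.
    group_sizes = Counter(tuple(row) for row in x_rows).values()
    df_total_error = n_obs - n_params
    # Each group of n_i observations contributes n_i - 1 pure error DF.
    df_pure_error = sum(n_i - 1 for n_i in group_sizes)
    df_lack_of_fit = df_total_error - df_pure_error
    return df_lack_of_fit, df_pure_error, df_total_error

# Made-up design: three X settings, each replicated twice; straight-line model.
x_rows = [(1,), (1,), (2,), (2,), (3,), (3,)]
print(lack_of_fit_df(x_rows, n_params=2))  # (1, 3, 4)
```

Groups with a single observation contribute zero, so only replicated settings add to the Pure Error DF.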

Sum of Squares

The associated sum of squares (SS) for each source of error:

– The Total Error SS is the sum of squares found on the Error line of the corresponding Analysis of Variance table.

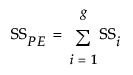

– The Pure Error SS is the total of the sum of squares values for each replicated group of observations. The Pure Error SS divided by its DF estimates the variance of the response at a given predictor setting. This estimate is unaffected by the model. In general, if there are $g$ groups and if each group has identical settings for each effect, the Pure Error SS, denoted $\mathrm{SS}_{PE}$, is defined as follows:

$$\mathrm{SS}_{PE} = \sum_{i=1}^{g} \mathrm{SS}_i$$

where $\mathrm{SS}_i$ is the sum of the squared differences between each observed response and the mean response for the $i$th group.

– The Lack of Fit SS is the difference between the Total Error and Pure Error sums of squares.
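As a worked illustration of the sum of squares decomposition, the sketch below computes the three quantities for a small made-up data set fit with a straight line. The helper names `pure_error_ss` and `straight_line_error_ss` and the data are hypothetical; in practice the Total Error SS would be read from the Error line of the Analysis of Variance table rather than refit by hand.

```python
from collections import defaultdict

# Hypothetical helper: pools the within-group sums of squares.
def pure_error_ss(x_rows, y):
    groups = defaultdict(list)
    for row, response in zip(x_rows, y):
        groups[tuple(row)].append(response)
    total = 0.0
    for responses in groups.values():
        mean = sum(responses) / len(responses)
        # Squared deviations from the group mean for this replicated setting.
        total += sum((r - mean) ** 2 for r in responses)
    return total

# Hypothetical helper: error SS of an ordinary least squares straight line,
# standing in for the Error line of the Analysis of Variance table.
def straight_line_error_ss(x, y):
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))

# Made-up data: three X settings, each replicated twice.
x = [1, 1, 2, 2, 3, 3]
y = [2, 4, 3, 5, 9, 11]

ss_total_error = straight_line_error_ss(x, y)
ss_pure_error = pure_error_ss([(xi,) for xi in x], y)
ss_lack_of_fit = ss_total_error - ss_pure_error

print(round(ss_total_error, 3), round(ss_pure_error, 3), round(ss_lack_of_fit, 3))
# 14.333 6.0 8.333
```

The Pure Error SS depends only on the replicated responses, so it would stay at 6.0 whatever model is fit to these data; only the Total Error and Lack of Fit values change with the model.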

Mean Square

The mean square for the Source, which is the Sum of Squares divided by the DF. A Lack of Fit mean square that is large compared to the Pure Error mean square suggests that the model is not fitting well. The F ratio provides a formal test.

F Ratio

The ratio of the Mean Square for Lack of Fit to the Mean Square for Pure Error. The F Ratio tests the hypothesis that the variances estimated by the Lack of Fit and Pure Error mean squares are equal, which is interpreted as representing “no lack of fit”.

Prob > F

The p-value for the Lack of Fit test. A small p-value indicates a significant lack of fit.
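The Mean Square and F Ratio columns follow directly from the Sum of Squares and DF columns. The sketch below shows the arithmetic for illustrative, made-up inputs; the function name `lack_of_fit_f_ratio` is hypothetical.

```python
# Hypothetical helper: mean squares and F ratio from the SS and DF columns.
def lack_of_fit_f_ratio(ss_lack_of_fit, df_lack_of_fit, ss_pure_error, df_pure_error):
    ms_lack_of_fit = ss_lack_of_fit / df_lack_of_fit
    ms_pure_error = ss_pure_error / df_pure_error
    f_ratio = ms_lack_of_fit / ms_pure_error
    return ms_lack_of_fit, ms_pure_error, f_ratio

# Made-up values: Lack of Fit SS = 25/3 on 1 DF, Pure Error SS = 6 on 3 DF.
ms_lof, ms_pe, f_ratio = lack_of_fit_f_ratio(25 / 3, 1, 6.0, 3)
print(round(ms_lof, 3), round(ms_pe, 3), round(f_ratio, 3))
# 8.333 2.0 4.167
```

The Prob > F value is the upper-tail probability of an F distribution with the Lack of Fit DF as numerator and the Pure Error DF as denominator. It is not computed here because the Python standard library has no F distribution; if SciPy is available, `scipy.stats.f.sf(f_ratio, df_lack_of_fit, df_pure_error)` is one way to obtain it.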

Max RSq

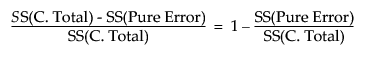

The maximum RSquare that can be achieved by a model based only on these effects. The Pure Error Sum of Squares is invariant to the form of the model, so the largest proportion of variation that a model with these replicated effects can explain equals:

$$1 - \frac{\mathrm{SS}(\text{Pure Error})}{\mathrm{SS}(\text{Total for whole model})}$$

This formula defines the Max RSq.
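A small numeric illustration of Max RSq follows. It assumes that Max RSq is one minus the ratio of the Pure Error SS to the total sum of squares for the whole model; the function name `max_rsq` and the data are hypothetical.

```python
# Hypothetical helper: assumes Max RSq = 1 - SS(Pure Error) / SS(Total).
def max_rsq(ss_pure_error, ss_total):
    return 1.0 - ss_pure_error / ss_total

# Made-up responses: replicated pairs (2, 4), (3, 5), and (9, 11).
y = [2, 4, 3, 5, 9, 11]
y_bar = sum(y) / len(y)
# Total sum of squares about the overall mean.
ss_total = sum((value - y_bar) ** 2 for value in y)

# Each pair deviates by +/-1 from its own mean, so Pure Error SS = 3 * 2 = 6.
ss_pure_error = 6.0

print(round(max_rsq(ss_pure_error, ss_total), 4))  # 0.9053
```

No model built from these effects can exceed this RSquare on these data, because the variation within each replicated pair cannot be explained by the X settings.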