Overview of the Explore Missing Values Platform

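The Explore Missing Values platform provides several methods to identify, understand, and impute the missing values in your data. The following imputation methods are described in this section:

• Multivariate Normal Imputation

• Multivariate SVD Imputation

• Multivariate RPCA Imputation

• Automated Data Imputation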

Multivariate Normal Imputation

The Multivariate Normal Imputation method in the Explore Missing Values platform imputes missing values based on the multivariate normal distribution. The procedure requires that all variables have a Continuous modeling type. The algorithm uses least squares imputation. The covariance matrix is constructed using pairwise covariances. The diagonal entries (variances) are computed using all nonmissing values for each variable. The off-diagonal entries for any two variables are computed using all observations that are nonmissing for both variables. In cases where the covariance matrix is singular, the algorithm uses minimum norm least squares imputation based on the Moore-Penrose pseudo-inverse.
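The following NumPy sketch illustrates the idea of pairwise covariances combined with least squares imputation through the pseudo-inverse. It is not the platform's implementation. The function names `pairwise_cov` and `mvn_impute` are hypothetical, missing values are assumed to be coded as NaN, and each pair of variables is assumed to share at least two nonmissing rows.

```python
import numpy as np

def pairwise_cov(X):
    """Covariance matrix built from pairwise-complete observations (NaN = missing)."""
    p = X.shape[1]
    S = np.empty((p, p))
    for i in range(p):
        for j in range(i, p):
            # Rows where both variables are observed (for i == j: all nonmissing rows)
            both = ~np.isnan(X[:, i]) & ~np.isnan(X[:, j])
            xi, xj = X[both, i], X[both, j]
            # Assumption: means are taken over the same pairwise-complete rows
            S[i, j] = S[j, i] = np.sum((xi - xi.mean()) * (xj - xj.mean())) / (both.sum() - 1)
    return S

def mvn_impute(X):
    """Replace each missing cell with its conditional mean given the observed cells."""
    X = np.asarray(X, dtype=float)
    mu = np.nanmean(X, axis=0)
    S = pairwise_cov(X)
    out = X.copy()
    for r in range(X.shape[0]):
        m = np.isnan(X[r])          # missing cells in this row
        if not m.any():
            continue
        if m.all():
            out[r] = mu             # nothing observed: fall back to the column means
            continue
        o = ~m
        # pinv gives the minimum norm least squares solution if S[o, o] is singular
        coef = S[np.ix_(m, o)] @ np.linalg.pinv(S[np.ix_(o, o)])
        out[r, m] = mu[m] + coef @ (X[r, o] - mu[o])
    return out
```

Because each entry of the pairwise covariance matrix can be based on a different subset of rows, the imputed values need not match what a complete-case regression would produce.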

Multivariate Normal Imputation provides an option to use a shrinkage estimator for the covariances. Shrinkage estimators can improve the estimate of the covariance matrix. For more information about shrinkage estimators, see Schäfer and Strimmer (2005).
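As a rough illustration of what shrinkage does, the sketch below pulls a covariance matrix toward its own diagonal. The function name `shrink_cov`, the diagonal target, and the user-supplied intensity `lam` are all assumptions made for this example. The data-driven choice of intensity described in the shrinkage literature is not implemented here, and the target used by the platform is not stated in this section.

```python
import numpy as np

def shrink_cov(S, lam):
    """Shrink covariance matrix S toward its diagonal with intensity lam in [0, 1]."""
    S = np.asarray(S, dtype=float)
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be between 0 and 1")
    target = np.diag(np.diag(S))           # keeps the variances, zeroes the covariances
    return lam * target + (1.0 - lam) * S  # variances unchanged, covariances scaled by (1 - lam)

# A singular covariance matrix becomes invertible after a small amount of shrinkage.
S = np.array([[4.0, 2.0], [2.0, 1.0]])
S_shrunk = shrink_cov(S, lam=0.2)
```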

Note: If a validation column is specified, the covariance matrices are computed using observations from the Training set.

Multivariate SVD Imputation

The Multivariate SVD Imputation method in the Explore Missing Values platform imputes missing values using the singular value decomposition (SVD). Because SVD calculations do not require calculation of a covariance matrix, this method is recommended for wide problems, such as data with hundreds or thousands of variables. The procedure requires that all variables have a Continuous modeling type.

The singular value decomposition represents a matrix of observations X as X = UDV′, where U and V are orthogonal matrices and D is a diagonal matrix that contains the singular values.
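The factorization can be checked numerically. The sketch below uses NumPy's dense `np.linalg.svd` on a small random matrix. The matrix and its dimensions are made up for illustration. With `full_matrices=False`, U is not square, so it has orthonormal columns rather than being a full orthogonal matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))          # 6 observations, 4 variables

# d holds the singular values; Vt is V' (V transposed)
U, d, Vt = np.linalg.svd(X, full_matrices=False)
D = np.diag(d)

X_rebuilt = U @ D @ Vt               # X = U D V'
```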

The SVD algorithm used in the Multivariate SVD Imputation method is the sparse Lanczos method, also known as the implicitly restarted Lanczos bidiagonalization method (IRLBA). See Baglama and Reichel (2005). The Multivariate SVD Imputation algorithm does the following:

1. Each missing value is replaced with its column’s mean.

2. An SVD is performed on the matrix of observations, X.

3. Each cell that had a missing value is replaced by the corresponding element of the UDV′ matrix obtained from the SVD.

4. Steps 2 and 3 are repeated until the SVD converges to the matrix X or the maximum number of iterations is reached.
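The four steps above can be sketched as follows. This is an illustration under several assumptions, not the platform's code: it uses a dense SVD from NumPy instead of the sparse Lanczos method, it truncates the reconstruction to a user-supplied `rank` (this section does not say how many singular vectors are retained), and it declares convergence when the relative change in the filled matrix falls below `tol`. The function name `svd_impute` is hypothetical.

```python
import numpy as np

def svd_impute(X, rank=2, max_iter=100, tol=1e-6):
    """Iterative SVD imputation for a numeric matrix with NaN for missing cells."""
    X = np.asarray(X, dtype=float)
    miss = np.isnan(X)
    # Step 1: start from column means in the missing cells
    filled = np.where(miss, np.nanmean(X, axis=0), X)
    for _ in range(max_iter):
        # Step 2: SVD of the current filled matrix
        U, d, Vt = np.linalg.svd(filled, full_matrices=False)
        approx = (U[:, :rank] * d[:rank]) @ Vt[:rank]   # truncated U D V'
        # Step 3: overwrite only the cells that were originally missing
        new = np.where(miss, approx, X)
        change = np.linalg.norm(new - filled) / max(np.linalg.norm(filled), 1e-12)
        filled = new
        # Step 4: stop when the filled matrix no longer changes
        if change < tol:
            break
    return filled

# Usage: a rank-1 table with one missing cell
X = np.outer([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0])
X[1, 1] = np.nan
X_filled = svd_impute(X, rank=1, max_iter=500, tol=1e-10)
```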

Multivariate RPCA Imputation

The Multivariate RPCA Imputation method in the Explore Missing Values platform imputes missing values using robust principal component analysis (robust PCA). This method replaces missing values using a low-rank matrix factorization (SVD) that is robust to outliers. It is the same method that is used in the Robust PCA Outliers method in the Explore Outliers platform. See “Robust PCA Outliers”. This method is useful for wide problems, but it can be computationally expensive for data with very large dimensions. The procedure requires that all variables have a Continuous modeling type.

Automated Data Imputation

The Automated Data Imputation (ADI) method in the Explore Missing Values platform imputes missing values using a low-rank matrix approximation method, also known as matrix completion. Once trained, the ADI model can impute missing values for streaming data through scoring formulas. Streaming data are added rows of observations that become available over time and were not used for tuning or validating the imputation model. The method is flexible and robust, and it automatically selects the best dimension for the low-rank approximation. These features enable ADI to work well for many different types of data sets.

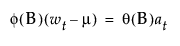

A low-rank approximation of a matrix X is of the form X ≈ UDV′ and can be viewed as an extension of singular value decomposition (SVD). ADI uses the Soft-Impute method as the imputation model and is designed such that the data determine the rank of the low-rank approximation.
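To make the role of the tuning parameter concrete, the following is a minimal sketch of a Soft-Impute style iteration: fill the missing cells with the current estimate, take an SVD, and shrink every singular value by `lam`, which sets small singular values to zero and so determines the rank. The function name `soft_impute`, the zero starting value, the dense SVD, and the stopping rule are assumptions for illustration. The sketch also assumes that the data have already been centered and scaled.

```python
import numpy as np

def soft_impute(X, lam, max_iter=200, tol=1e-6):
    """Soft-thresholded SVD imputation; returns the filled matrix and the fitted rank."""
    X = np.asarray(X, dtype=float)
    miss = np.isnan(X)
    Z = np.zeros_like(X)                       # current low-rank estimate
    for _ in range(max_iter):
        filled = np.where(miss, Z, X)          # observed data plus current guesses
        U, d, Vt = np.linalg.svd(filled, full_matrices=False)
        d_shrunk = np.maximum(d - lam, 0.0)    # soft-threshold the singular values
        Z_new = (U * d_shrunk) @ Vt
        change = np.linalg.norm(Z_new - Z) / max(np.linalg.norm(Z), 1e-12)
        Z = Z_new
        if change < tol:
            break
    rank = int(np.count_nonzero(d_shrunk))     # number of singular values that survived
    return np.where(miss, Z, X), rank
```

A larger `lam` removes more singular values and yields a lower rank. A value of `lam` above the largest singular value yields rank 0, in which case every missing cell is filled with 0 (the mean, on centered data).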

The ADI algorithm performs the following steps:

1. The data are partitioned into training and validation sets.

2. Each set is centered and scaled using the observed values from the training set.

3. For each partitioned data set, additional missing values are added within each column and are referred to as induced missing (IM) values.

4. The imputation model is fit on the training data set along a solution path of tuning parameters. The IM values are used to determine the best value for the tuning parameter.

5. Additional rank reduction is performed using the training data set by de-biasing the results from the chosen imputation model in step 4.

6. Final rank reduction is performed to calibrate the model for streaming data and to prevent overfitting. This is done by fitting the imputation model on the validation set, using the rank determined in step 5 as an upper bound.
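Steps 2 through 4 can be sketched on a single training set as shown below. The sketch hides a fraction of the observed cells in each column, fits a Soft-Impute style model for each candidate tuning parameter, and keeps the parameter with the smallest error on the hidden cells. All names (`soft_impute_fit`, `induce_missing`, `choose_lambda`), the 10% induced-missing fraction, and the squared-error criterion are assumptions for illustration. The train/validation partition and the de-biasing and rank-reduction steps (steps 1, 5, and 6) are not shown.

```python
import numpy as np

def soft_impute_fit(X, lam, n_iter=100):
    """Low-rank estimate from repeated soft-thresholded SVDs (NaN = missing)."""
    miss = np.isnan(X)
    Z = np.zeros_like(X)
    for _ in range(n_iter):
        U, d, Vt = np.linalg.svd(np.where(miss, Z, X), full_matrices=False)
        Z = (U * np.maximum(d - lam, 0.0)) @ Vt
    return Z

def induce_missing(X, frac, rng):
    """Step 3: hide a fraction of the observed cells within each column."""
    im = np.zeros(X.shape, dtype=bool)
    for j in range(X.shape[1]):
        obs = np.flatnonzero(~np.isnan(X[:, j]))
        if obs.size < 2:
            continue                           # leave nearly empty columns alone
        k = min(obs.size - 1, max(1, int(frac * obs.size)))
        im[rng.choice(obs, size=k, replace=False), j] = True
    X_im = X.copy()
    X_im[im] = np.nan
    return X_im, im

def choose_lambda(X_train, lambdas, frac=0.1, seed=0):
    """Steps 2 and 4: standardize, then pick lam by error on the induced-missing cells."""
    rng = np.random.default_rng(seed)
    mu = np.nanmean(X_train, axis=0)           # statistics from observed training values
    sd = np.nanstd(X_train, axis=0, ddof=1)
    Xs = (X_train - mu) / sd
    X_im, im = induce_missing(Xs, frac, rng)
    errors = []
    for lam in lambdas:
        Z = soft_impute_fit(X_im, lam)
        errors.append(float(np.mean((Z[im] - Xs[im]) ** 2)))
    return lambdas[int(np.argmin(errors))], errors
```

Because the true values of the induced missing cells are known, they act as a built-in holdout for tuning without requiring any fully observed rows.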

Note: The Automated Data Imputation option requires a column to contain at least two nonmissing values to impute values for that column. If the algorithm is unable to find a low-rank approximation, the mean is imputed for continuous variables and the mode is imputed for categorical variables. These values are calculated from the training set.